Page speed really does matter

At Cucumbertown we use several strategies to keep page loads in the 2-second range, and at most under 3 seconds. We are fanatical about this.

Naturally, we have quite a few alerts that fire when page load crosses the upper threshold of 3 seconds.

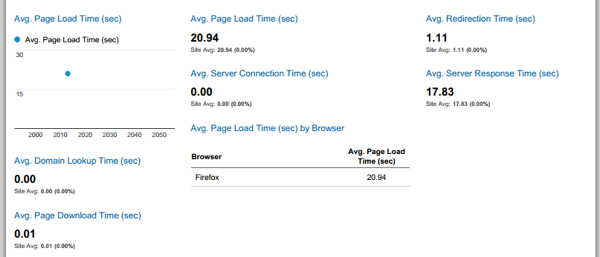

A couple of days ago, Chris Zacharias wrote about how page weight matters and how YouTube dealt with it. So when an email from Google Analytics pops up in your inbox alerting you to page loads of over 20 seconds, you stop everything to find out what's happening.

A page-load regression is usually caught right after a production push, mostly through random testing or by our highly engaged users. That didn't happen here, and the alert came a day later.

That freaks you out. IMO, an unknown problem whose root cause you haven't figured out yet is a greater danger than a big bug whose cause you already know.

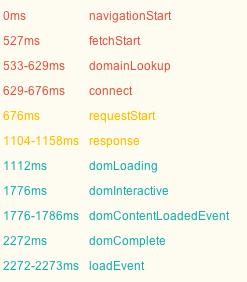

We started probing and that’s when we saw results like this.

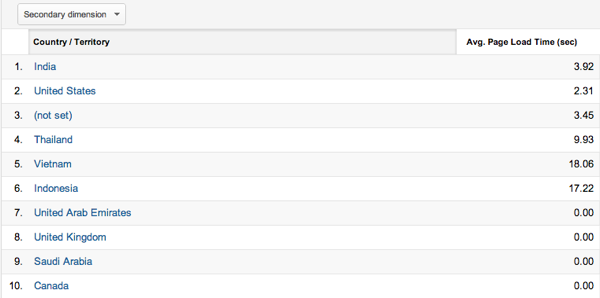

And of course, Google Analytics was averaging the load times, and a few very slow page loads were skewing the average.
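To see how a handful of slow visits can distort an average, here is a small sketch with made-up load times (hypothetical numbers, not our actual Analytics data). The mean gets dragged far above what a typical visitor experiences, while the median and a high percentile separate "most people" from "the slow tail". The `percentile` helper is a simple nearest-rank implementation written for illustration.

```python
import math
from statistics import mean, median

# Hypothetical page-load samples in seconds: most visitors load fast,
# while two visitors on distant, slow connections take far longer.
load_times = [2.1, 2.4, 2.2, 2.6, 2.3, 2.5, 2.0, 2.4, 25.0, 31.0]

def percentile(samples, pct):
    """Nearest-rank percentile (pct between 0 and 100) of a list of samples."""
    ordered = sorted(samples)
    # Nearest-rank method: rank = ceil(pct/100 * n), at least 1.
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]

print(f"mean   {mean(load_times):.2f}s")            # skewed by the two outliers
print(f"median {median(load_times):.2f}s")          # what a typical visitor sees
print(f"p95    {percentile(load_times, 95):.2f}s")  # how bad the slow tail is
```

Here the mean comes out at 7.45 s even though eight of the ten visitors loaded the page in under 3 seconds; the median stays at 2.4 s. Alerting on a median or percentile per country would have pointed at the slow regions much sooner than a global average.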

When we correlated this with other data, the pieces of the jigsaw started to fall into place. Cucumbertown had been picked up by a food channel in Nigeria and a prominent blogger in Thailand, and they were bringing in the crowds. But as you can see, page load in these countries is ridiculously slow.

Cucumbertown is an asset-heavy website, and there is a significant cost to loading the basic scripts, even though we defer everything through RequireJS and load JavaScript dynamically as needed. Even the basic DOM load takes time.

Put this together with a load time of 2.5 seconds in the US, the hard limit set by the speed of light, and latency of around 43 ms for DSL connections across the globe, and it's time to start thinking about serving our assets from a CDN.
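A rough back-of-the-envelope sketch of why distance matters. It rests on stated assumptions: light in optical fiber travels at roughly 200,000 km/s (about two-thirds of its speed in a vacuum), the 43 ms DSL figure is treated as last-mile latency added to every round trip, and the distances and the count of 20 sequential round trips are illustrative, not measured from Cucumbertown.

```python
# Assumption: signal speed in optical fiber is roughly 2/3 of c,
# i.e. about 200,000 km/s, or 200 km per millisecond.
FIBER_KM_PER_MS = 200.0

# Assumption: the ~43 ms DSL latency is last-mile delay paid on every round trip.
LAST_MILE_MS = 43.0

def round_trip_ms(distance_km: float) -> float:
    """Lower-bound round-trip time: propagation there and back plus last-mile latency."""
    propagation = 2 * distance_km / FIBER_KM_PER_MS
    return propagation + LAST_MILE_MS

def blocking_latency_ms(distance_km: float, round_trips: int) -> float:
    """Time spent purely waiting on sequential round trips (ignores bandwidth and server time)."""
    return round_trips * round_trip_ms(distance_km)

# Hypothetical distances: a far-away origin server vs. a nearby CDN edge.
for label, km in [("origin ~10,000 km away", 10_000), ("CDN edge ~500 km away", 500)]:
    print(f"{label}: {round_trip_ms(km):.0f} ms per round trip, "
          f"{blocking_latency_ms(km, 20):.0f} ms over 20 sequential round trips")
```

Under these assumptions a round trip to the distant origin costs about 143 ms versus 48 ms to a nearby edge, so 20 blocking round trips (DNS, TCP and TLS handshakes, then script and stylesheet fetches that depend on each other) add up to roughly 2.9 s versus under 1 s before a single byte of bandwidth is counted. No amount of server tuning recovers that; only moving the assets closer does.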

At Zynga we initially relied on Akamai but later switched to Limelight, and they look like a prime candidate here.

But CloudFlare's recent activity on Hacker News and the umbrella of features they offer made them tempting to explore, so I dipped a toe in the water to test CloudFlare.

Right now this blog is served through CloudFlare on a free plan that includes CDN caching of assets. The experience is good when there is a rush of requests, but if the site isn't accessed consistently, the cache seems to get evicted: the first hit after a period of inactivity loads noticeably slower, while follow-up requests load in 1.5 to 2 seconds.
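The pattern above is what a cold cache looks like. Here is a toy model of an edge cache that drops entries after a fixed idle period, to show why the first visitor after a quiet spell pays the full trip to the origin. This is a hypothetical simplification: the `IdleExpiringCache` class, the idle-timeout policy and the timings are assumptions for illustration, not how CloudFlare actually decides what to evict.

```python
class IdleExpiringCache:
    """Toy edge cache (hypothetical): an entry is treated as gone once it
    has not been requested for idle_ttl seconds."""

    def __init__(self, idle_ttl: float, fetch_from_origin):
        self.idle_ttl = idle_ttl
        self.fetch_from_origin = fetch_from_origin  # called on every miss
        self._entries = {}  # key -> (value, last_access_time)

    def get(self, key, now: float):
        """Return (value, hit) for key at time `now`, in seconds."""
        entry = self._entries.get(key)
        if entry is not None and now - entry[1] <= self.idle_ttl:
            value, hit = entry[0], True
        else:
            # Cold or idle-expired: go back to the slow, distant origin.
            value, hit = self.fetch_from_origin(key), False
        # Every access refreshes the idle timer.
        self._entries[key] = (value, now)
        return value, hit


origin_fetches = []

def fetch(key):
    """Hypothetical origin fetch; records each request that reaches the origin."""
    origin_fetches.append(key)
    return f"contents of {key}"

cache = IdleExpiringCache(idle_ttl=3600, fetch_from_origin=fetch)
first = cache.get("/app.js", now=0)            # miss: first visitor pays the origin trip
second = cache.get("/app.js", now=60)          # hit: served from the edge
third = cache.get("/app.js", now=60 + 7200)    # miss: idle for two hours, entry expired
```

In a model like this, steady traffic keeps popular assets warm, while a low-traffic site keeps falling back to cold misses. That matches the behavior this blog sees, though real CDN eviction also depends on cache headers, storage pressure and popularity across all customers.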

I have always thought of CDNs as an expensive, enterprise-y word. But here we are: a startup serving the globe, and a CDN looks imperative.

Do you have any advice? How’s your experience been?